We introduce the first gold-standard financial benchmark for systematically comparing word embeddings using a financial language framework. This benchmark covers seven groups of financial analogies. Each group contains 80 analogies reaching 2660 unique analogies in total for all groups. All financial analogies are developed using the Bureau van Dijk’s Orbis database and are available for download.

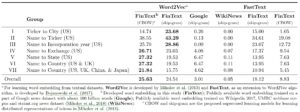

In the table below, the first five groups cover publicly listed US companies, the sixth group mixes US and UK publicly listed companies, and the last group mixes US, UK, China, and Japan publicly listed companies. ‘Ticker’ is the security ticker identifier, ‘Name’ is the full name of the company, ‘City’ is the headquarters location, ‘Exchange’ is the stock exchange where the company’s share is traded, ‘Country’ is the country where headquarters is located, ‘State’ (for US companies) is the state where the headquarters is located, and finally ‘Incorporation year’ is the incorporation year of the company. To generate sufficient challenges, we chose the top 20, 10 and 5 companies from the ‘very large companies’ class for groups I-V, VI and VII, respectively. The permutation of chosen companies in each group generates 380 unique analogies for each group and 2660 analogies in total. The accuracy of each word embedding is reported for each group and all groups (overall).

This table clearly shows that FinText has substantially better performance than all other word embeddings; it is 8 times better than Google Word2Vec, and 512 times better than WikiNews. WikiNews accuracy is lower than 0.1% for all sections, with an overall accuracy of 0.05%. For Google Word2Vec, the overall accuracy is 3.01%.